Frontlines.io | Where B2B Founders Talk GTM.

Conversation Highlights

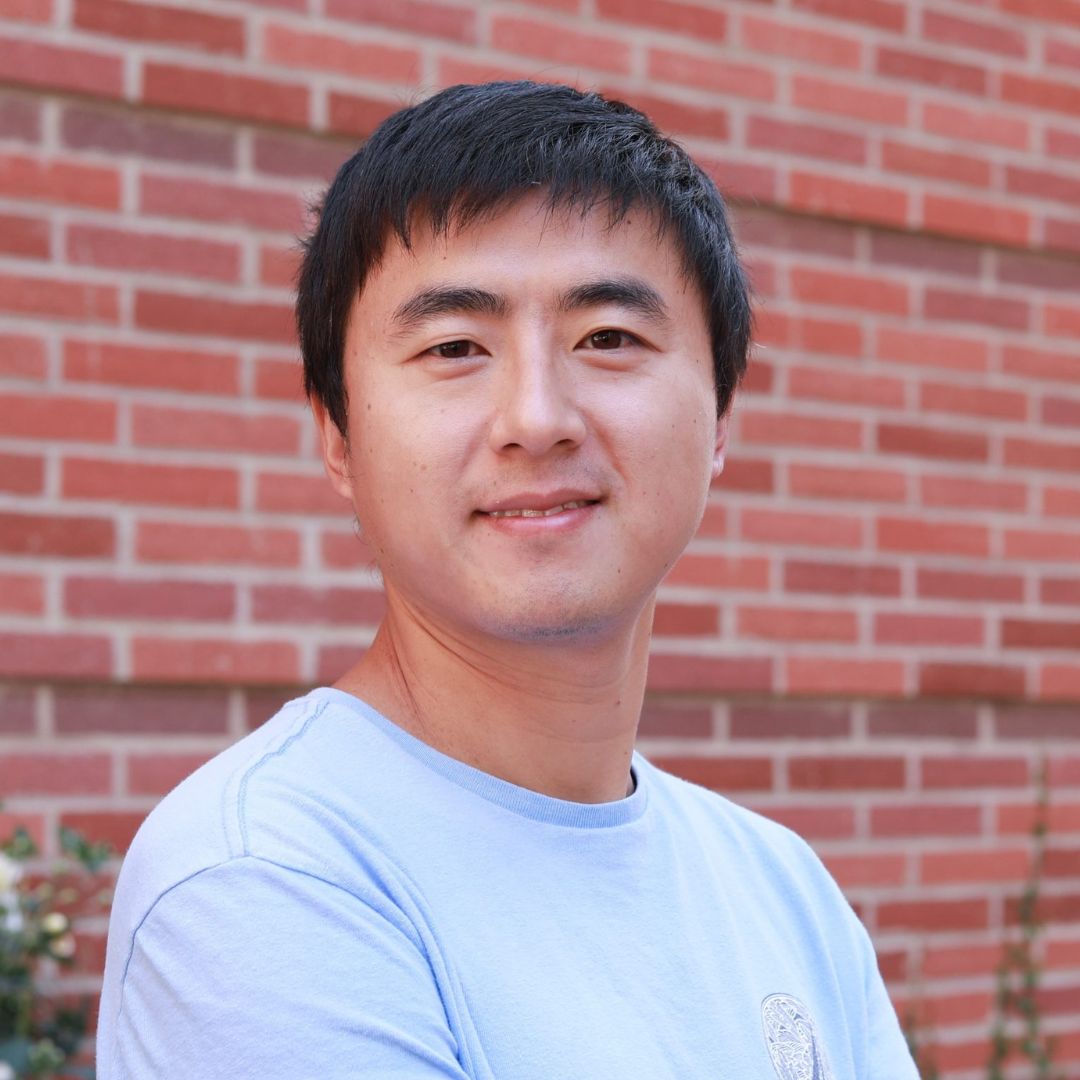

Beyond the AI Hype: How Contextual AI is Building Enterprise-Ready Language Models

The distance between an impressive AI demo and a production-ready enterprise solution is vast. In a recent episode of Category Visionaries, Douwe Kiela, CEO of Contextual AI, revealed how his company is bridging this gap by building the next generation of language models specifically designed for enterprise deployment.

The Problem with Current Language Models

While large language models have captured mainstream attention, their enterprise adoption faces significant hurdles. “Everybody can see that they’re going to change the world,” Douwe explains, “but at the same time, there’s a lot of frustration, I think, especially in enterprises where you can build very nice demos, but to get these models to actually be production grade, so enterprise grade for a production use case, that requires a lot more work.”

The challenges are multifaceted: hallucination, attribution issues, data privacy concerns, and prohibitive costs. Instead of tackling these problems individually, Contextual AI is taking a fundamentally different approach. As Douwe notes, “You can solve these problems one by one. That’s what our competitors are doing. Or you can try to really go back to the drawing board and try to design a better next generation of language models that overcomes these issues all in one go.”

A Product-Led Go-to-Market Strategy

Contextual AI’s go-to-market approach stands out for letting the market pull the company in the right direction. “We’re in a very fortunate position where we’re basically not doing any outreach and folks are coming to us with their problems,” Douwe shares. This organic traction comes from Fortune 500 companies that have built demos but couldn’t get them into production.

This market-pull approach has helped them identify their ideal customers: “The most tech forward companies who already know exactly these are the top ten use cases that we’re most interested in. We’re not going to put all of our eggs in one basket… and really have a strategy in place for what they’re trying to achieve.”

Leveraging Open Source to Accelerate Development

A pivotal moment in Contextual AI’s journey came with the release of Meta’s Llama models. Initially, the company thought they would need to train large language models from scratch. However, they discovered a more efficient path: “When it comes to the bigger scales of more billions of parameters in the language models, we can leverage open source models, which are a very good starting point and kind of contextualize those rather than having to train our entire system at that scale from scratch,” Douwe explains.

Navigating the AI Hype Cycle

Looking ahead to 2024, Douwe anticipates a significant market correction: “This hype train is going to stop at some point and so the tide is going to run out and a bunch of people are going to get caught swimming naked.” Rather than riding the hype wave, Contextual AI is focused on building sustainable technology that delivers concrete value to enterprises.

Their approach centers on specialized intelligence rather than chasing artificial general intelligence (AGI). “Where I think the real solution lies is in much more specialized solutions,” Douwe emphasizes. “If they have to do this repetitive thing over and over again, what if they can outsource that to their AI coworker and they are almost like their own CEO of their little team of AI kind of assistants?”

The Vision: Transforming Enterprise Work

Contextual AI’s ultimate goal extends beyond just improving language models. “What we really want to do is we want to change the way the world works, literally,” Douwe states. Their vision is to become the go-to enterprise language model platform, enabling workers to become “their own CEOs of their own little groups and teams of coworkers.”

For founders building in the AI space, Contextual AI’s journey offers valuable lessons: focus on solving real enterprise problems, let market demand guide your direction, and build for the long term beyond the current hype cycle. As Douwe puts it, “The most disruptive place for this technology, I think, will be in the workplace. And so that’s exactly where we want to be.”

Actionable Takeaways

Learn from Analogous Industries:

Douwe draws lessons from the web browser and the Linux kernel when thinking about the appropriate level of regulation for AI models, arguing that regulation should target applications rather than the underlying technology itself. Founders should look to analogous industries for insights on how to navigate complex regulatory landscapes.

Leverage Open-Source Models to Accelerate Progress:

By building on top of open-source models like Meta's Llama, Contextual AI was able to focus on contextualizing and fine-tuning rather than training from scratch, shortening its time to market. Founders should actively seek out opportunities to collaborate with and build upon open-source initiatives in their respective fields.

Focus on Tech-Forward Early Adopters:

When faced with a broad potential market, Douwe recommends prioritizing companies that have a clear understanding of their AI use cases, success criteria, and overall strategy. These tech-forward early adopters are more likely to be receptive to innovative solutions and can serve as valuable design partners and references.

Cultivate a Strong Team and Investor Network:

Contextual AI's inbound interest from Fortune 500 companies is largely driven by the strength of their team's pedigree and the connections provided by their investor network. Founders should invest in building a team with deep domain expertise and seek out investors who can provide strategic introductions and support.

Stay True to Yourself in Fundraising:

Douwe advises founders to be authentic and self-aware in the fundraising process, rather than exaggerating or bullshitting their way through difficult questions. By staying close to one's own strengths and being honest about areas for improvement, founders can maximize their chances of successful fundraising on their own terms.